Neonatal-Perinatal Health Care Delivery: Practices and Procedures

Neonatal-Perinatal Health Care Delivery 3: Practices: Growth & Nutrition, Potpourri

770 - PRAMS Pain Recognition Model classified neonatal pain with 98% accuracy

Publication Number: 770.247

Renee CB Manworren, PhD, APRN, PCNS-BC, FAAN (she/her/hers)

Director of Nursing Research & Professional Practice, Associate Professor of Pediatrics

Ann & Robert H. Lurie Children's Hospital of Chicago & Northwestern University Feinberg School of Medicine

Chicago, Illinois, United States

Presenting Author(s)

Background: Early-life pain is associated with adverse neurodevelopmental consequences. Currently, pain assessment is discontinuous, inconsistent, and highly dependent on clinician presence. Observational tools are used to assess neonatal pain; however, only the Neonatal Facial Coding System (NFCS) has been associated with brain-based evidence of pain, and the clinical utility of the NFCS is not optimal.

Objective: Our goal is to develop a continuous, unbiased, artificial intelligence (AI)-powered, clinician-in-the-loop, computational Pain Recognition Automated Monitoring System (PRAMS). In this study, nurses labeled NFCS assessment data to train a classification model. The study aims were to evaluate nurses' inter-rater reliability (IRR) and to compare the model's accuracy (correct predictions/total predictions), precision (true positives/[true positives + false positives]), recall (true positives/[true positives + false negatives]), and Area Under the Curve (AUC) with the nurses' AUC.
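The metric definitions above can be made concrete with a short sketch. The Python below computes accuracy, precision, and recall from binary pain/no-pain labels, and computes AUC as the probability that a randomly chosen pain example receives a higher score than a randomly chosen non-pain example (the Mann-Whitney formulation of ROC AUC, with ties counted as half). The function names and the toy labels and scores are hypothetical illustrations, not the study's code or data; the abstract does not say how the metrics were computed.

```python
from typing import Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[int, int, int, int]:
    """Return (TP, FP, TN, FN) for binary labels where 1 = pain, 0 = no pain."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, tn, fn


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Correct predictions / total predictions."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fp + tn + fn)


def precision(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """True positives / (true positives + false positives)."""
    tp, fp, _, _ = confusion_counts(y_true, y_pred)
    return tp / (tp + fp)


def recall(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """True positives / (true positives + false negatives)."""
    tp, _, _, fn = confusion_counts(y_true, y_pred)
    return tp / (tp + fn)


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random pain example outscores a random non-pain one."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


# Hypothetical toy example: model scores thresholded at 0.5 to get predictions.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.6, 0.1, 0.7]
y_pred = [1 if s >= 0.5 else 0 for s in scores]
print(accuracy(y_true, y_pred), precision(y_true, y_pred), recall(y_true, y_pred))  # 0.75 0.75 0.75
print(roc_auc(y_true, scores))  # 0.9375
```

Note that accuracy, precision, and recall depend on the chosen decision threshold, whereas AUC summarizes ranking quality across all thresholds, which is why AUC is the natural metric for comparing the model with nurses.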

Design/Methods:

Human to artificial intelligence (H2AI), an intuitive data-labeling software solution, enabled 6 nurses to label randomly assigned videos from iCOPEvid (infant Classification Of Pain Expression video database) and captured the resulting model training data. A pre-trained model, MobileFaceNet, was integrated into H2AI for face detection and landmarking. Data generated in H2AI from 16 pain videos (563 frames) and 46 non-pain videos (809 frames) were then used to train a supervised computer vision model to classify pain.
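The frame counts above imply a modest class imbalance, which is useful context when reading the accuracy reported in the Results: a trivial classifier that always predicted "no pain" would already be correct on about 59% of frames. The sketch below only does that arithmetic on the reported counts; it assumes evaluation frames follow the same pain/non-pain proportions as the training data, which the abstract does not state, and the function name is hypothetical.

```python
# Frame counts reported in the abstract.
PAIN_FRAMES = 563
NON_PAIN_FRAMES = 809


def class_balance(pain: int, non_pain: int) -> dict[str, float]:
    """Class proportions and the accuracy of always predicting the majority class."""
    total = pain + non_pain
    return {
        "total_frames": total,
        "pain_fraction": pain / total,
        "non_pain_fraction": non_pain / total,
        # A classifier that always answers "no pain" (the majority class here).
        "majority_baseline_accuracy": max(pain, non_pain) / total,
    }


result = class_balance(PAIN_FRAMES, NON_PAIN_FRAMES)
for name, value in result.items():
    print(f"{name}: {value:.3f}")
# total_frames: 1372.000, pain_fraction: 0.410,
# non_pain_fraction: 0.590, majority_baseline_accuracy: 0.590
```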

Results:

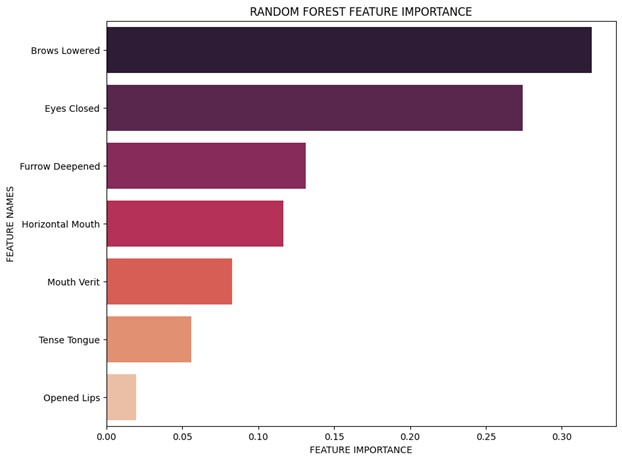

Weighted mean IRR for nurses was 71% (65-78%) for NFCS tasks, 78.5% (70-92%) for frame-level pain classification, and 88% (81-100%) for video-level pain classification. Nurses' AUC of 0.68 (0.59-0.74) indicated poor to acceptable discrimination. The correlation heatmap of the labeled data (Figure 1) and the champion model's feature importance graph (Figure 2) both indicate that brow lowering and nasolabial furrow deepening are important for classifying pain. The champion model performed well, with 98% accuracy, 97.7% precision, and 98.5% recall, and showed outstanding discrimination, with an AUC of 0.98.
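The abstract does not specify which IRR statistic was used or how the mean was weighted. As one plausible reading, the sketch below computes simple pairwise percent agreement across raters and then a weighted mean across tasks, assuming (hypothetically) that each task is weighted by the number of items rated. The function names and the toy ratings are illustrative only, not the study's method or data.

```python
from itertools import combinations
from typing import Sequence


def pairwise_percent_agreement(ratings: Sequence[Sequence[int]]) -> float:
    """Mean proportion of items on which each pair of raters gave the same label.

    ratings[r][i] is rater r's label for item i (e.g. 1 = pain, 0 = no pain).
    """
    n_items = len(ratings[0])
    pair_scores = []
    for a, b in combinations(ratings, 2):
        agree = sum(1 for x, y in zip(a, b) if x == y)
        pair_scores.append(agree / n_items)
    return sum(pair_scores) / len(pair_scores)


def weighted_mean(values: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted mean, e.g. per-task agreement weighted by items rated (assumed weighting)."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Hypothetical toy data: 3 raters labeling 4 frames.
ratings = [
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [1, 1, 0, 1],
]
print(pairwise_percent_agreement(ratings))  # ≈ 0.667 (pairs agree 0.75, 0.75, 0.5)
print(weighted_mean([0.8, 0.6], [3, 1]))    # ≈ 0.75
```

Percent agreement does not correct for agreement expected by chance; chance-corrected statistics such as Cohen's or Fleiss' kappa would generally give lower values on the same ratings.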

Conclusion(s): Nurses' labeled data were used to train a computer vision model that classified pain with greater accuracy, precision, and recall than is humanly possible. Micro-facial expressions, such as brow lowering, require less energy expenditure than mouth movements. The greater importance of micro-facial features than of mouth features suggests that we may be able to extend this model to classify pain in preterm and intubated neonates. Our next step in developing a continuous, automated, AI-powered, clinician-in-the-loop PRAMS is a clinical trial of this model.