Medical Education: Medical Student

Medical Education 2: Student 1

467 - Are EPA-based Medical Student Assessments Less Prone to Implicit Bias?

Publication Number: 467.123

Rachel Poeppelman, MD, MHPE (she/her/hers)

Assistant Professor

University of Minnesota Masonic Children's Hospital

Minneapolis, Minnesota, United States

Presenting Author(s)

Background:

Implicit bias in assessment has been increasingly recognized in recent years. Such bias not only threatens the validity of assessment data and the high-stakes decisions based on them, but also impedes the advancement and professional development of diverse trainees. Many medical schools have introduced entrustable professional activity (EPA)-based assessments, yet there are no published accounts of implicit bias in these assessments.

Objective:

To examine medical student EPA assessments for evidence of implicit bias.

Design/Methods:

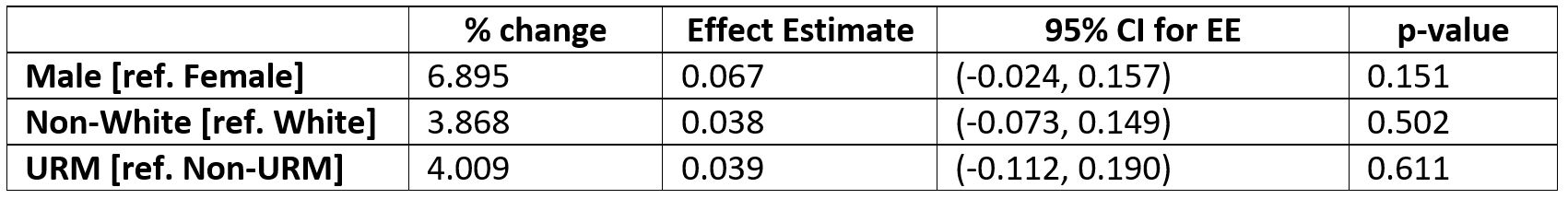

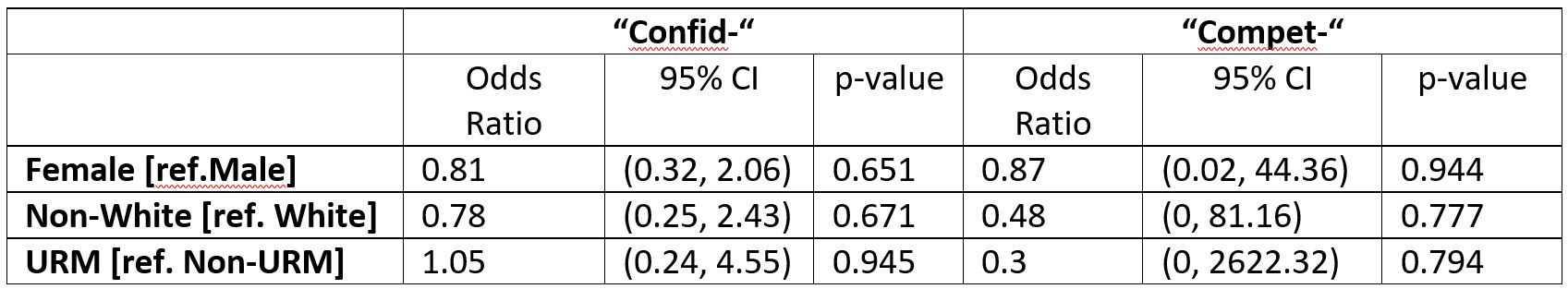

We collected de-identified EPA-based assessments of third-year medical students from May to October 2021, including EPA ratings, comments, and demographics. Linear mixed-effects models were fit to determine the relationship between log-total word count and student demographics, including gender (male/female) and race (both white/non-white and URM/non-URM). All assessment comments were searched for references to the learner using the word stems “compet-” (competence) and “confid-” (confidence). Term use was compared by demographics with multinomial mixed-effects logistic regression.
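The per-comment features described above (word count, its logarithm, and presence of the two word stems) can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function name `comment_features`, the regular expressions, whitespace tokenization, and the handling of empty comments are all assumptions, and the abstract does not say how "log-total word count" was aggregated.

```python
import math
import re

# Hypothetical patterns: the abstract names only the stems "compet-" and "confid-".
# \b anchors the stem at the start of a word, so "incompetent" is NOT matched,
# while unrelated words such as "confidential" ARE matched.
STEM_PATTERNS = {
    "compet": re.compile(r"\bcompet\w*", re.IGNORECASE),
    "confid": re.compile(r"\bconfid\w*", re.IGNORECASE),
}


def comment_features(comment: str) -> dict:
    """Return word count, log word count, and stem flags for one comment."""
    # Assumption: words are whitespace-delimited tokens.
    word_count = len(comment.split())
    features = {
        "word_count": word_count,
        # Assumption: the log is undefined (None) for an absent/empty comment.
        "log_word_count": math.log(word_count) if word_count > 0 else None,
    }
    for stem, pattern in STEM_PATTERNS.items():
        features[f"mentions_{stem}"] = bool(pattern.search(comment))
    return features


if __name__ == "__main__":
    print(comment_features("Confident and competent on rounds"))
```

A stem search of this kind is deliberately crude: it flags any word beginning with the stem, so matches would still need review to confirm that the term refers to the learner, as the methods specify.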

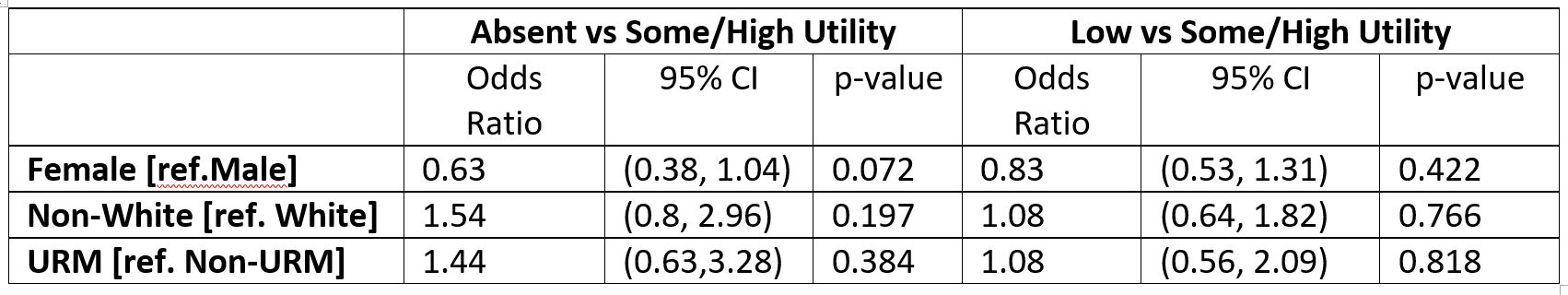

Three authors completed coding of themes for 100 randomly selected assessments, focusing both on previously identified language trends suggesting implicit bias and on newly identified themes. A codebook for “comment utility” was created: absent comment, minimal utility (lacking a suggestion for improvement or specific evidence of performance), and some/high utility. Another 1069 randomly selected comments were coded for comment utility independently by two authors. Differences in comment utility by demographics were examined by multinomial mixed-effects regression.

Results:

17,061 EPA-based assessments for 280 students were included. 56% of students identified as female and 61% as White. In all 17,061 assessments, there was no significant difference in comment length (word count), use of the words “compet-” (competence) or “confid-” (confidence) based on gender or race. In a random selection of 1069 narrative comments, there was no significant difference in comment utility by gender or race.

Conclusion(s):

We were unable to detect evidence of implicit bias in 17,061 EPA-based assessments. This raises the question of whether an EPA-based assessment framework is less prone to implicit bias. The automatic attribution of particular qualities to a member of a certain social group lies at the heart of implicit bias. It is reasonable to question whether a competency/milestone-based assessment, focused on the demonstration of certain qualities or abilities, could be more susceptible to implicit bias.