Medical Education: Medical Student

Medical Education 2: Student 1

477 - There's an App for That: Using Technology to Advance Competency-based Medical Education

Publication Number: 477.123

Ryan Flaherty, DO (he/him/his)

Clinical Fellow

Children's of Alabama

Birmingham, Alabama, United States

Presenting Author(s)

Background:

As many medical schools transition toward competency-based medical education (CBME), there remains little consensus on best practices for assessing and monitoring clinical skills during the clinical years. This is due in large part to the heterogeneity of the clinical learning environment, with marked variation in cases, observability, assessors, and time spent in each setting. We describe our approach, which is based on entrustable professional activities (EPAs) and paired with efficient, individualized data capture and monitoring systems, to make progress toward CBME monitoring in the clinical years.

Objective:

We describe an innovative approach to clinical skills assessment using an EPA framework and demonstrate the feasibility of implementing skills assessments and monitoring using novel digital tools and dashboards.

Design/Methods:

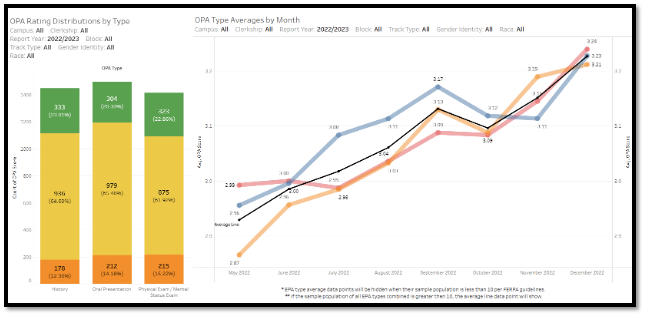

The UAB Heersink School of Medicine (UABHSOM) is a public medical school with ~800 students across 4 clinical campuses. We developed an electronic assessment app with monitoring dashboards at the student, faculty, and school levels, and implemented a multi-tiered assessment consisting of observed professional activities (OPAs) and formative feedback forms (FFFs) across all campuses. OPAs assess discrete, observable history, exam, and oral presentation components using checklists and a modified Chen entrustment scale. FFFs are workplace-based assessments that provide structured, EPA-based feedback organized by specific domains of competency.

Results:

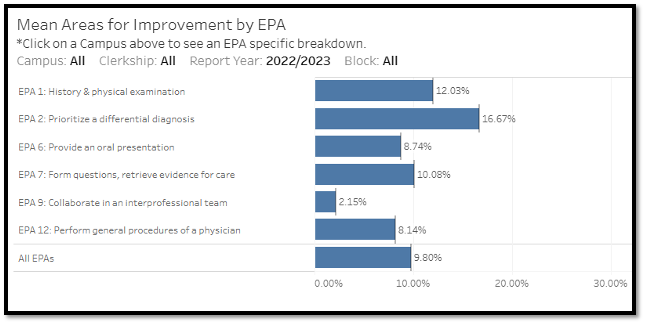

As of December 2022, students (n=229) had completed 4,355 OPAs and 271 during this academic year. From May to December, average OPA scores rose for history (2.96 to 3.23), physical exam (2.99 to 3.24), and oral presentation (2.87 to 3.21). Average OPA scores showed no difference by gender: 3.08 vs. 3.07 for male and female students, respectively (n=110 each). The percentages of EPA sub-competencies marked “need for improvement” on FFFs were: EPA 1 (H&P) 12%, EPA 2 (differential diagnosis) 17%, EPA 6 (oral presentation) 9%, EPA 7 (evidence for care) 10%, EPA 9 (interprofessional teams) 2%, and EPA 12 (general procedures of a physician) 8%.

Conclusion(s):

This educational innovation shows the feasibility of implementing data-driven, competency-based skills tracking within a clerkship app. Over time, this data will allow us to define developmental curves that describe longitudinal competency growth and to identify early the students who need additional intervention. The data also enables monitoring of student and evaluator characteristics that could suggest biases affecting competency ratings.